Introduction

Building production LLM applications? Two questions likely keep you up at night:

- How do you ensure your LLMs generate safe, appropriate content?

- How do you measure and improve output quality over time?

Weave scorers address both questions, and they can be used in two ways:

- As Guardrails: Block or modify unsafe content before it reaches users

- As Monitors: Track quality metrics over time to identify trends and improvements

Terminology

Throughout this guide, we’ll refer to functions decorated with @weave.op as “ops”. These are regular Python functions that have been enhanced with Weave’s tracking capabilities.

Ready-to-Use Scorers

While this guide shows you how to create custom scorers, Weave also comes with a variety of predefined scorers that you can use right away.

Guardrails vs. Monitors: When to Use Each

While scorers power both guardrails and monitors, they serve different purposes:

| Aspect | Guardrails | Monitors |
|---|---|---|
| Purpose | Active intervention to prevent issues | Passive observation for analysis |
| Timing | Real-time, before output reaches users | Can be asynchronous or batched |
| Performance | Must be fast (affects response time) | Can be slower, run in background |
| Sampling | Usually every request | Often sampled (e.g., 10% of calls) |
| Control Flow | Can block/modify outputs | No impact on application flow |
| Resource Usage | Must be efficient | Can use more resources if needed |

For example, a toxicity scorer could be used in either role:

- As a Guardrail: Block toxic content immediately

- As a Monitor: Track toxicity levels over time

Every scorer result is automatically stored in Weave’s database. This means your guardrails double as monitors without any extra work! You can always analyze historical scorer results, regardless of how they were originally used.

Using the .call() Method

To use scorers with Weave ops, you’ll need access to both the operation’s result and its tracking information. The .call() method provides both:
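The shape of this pattern can be illustrated without Weave installed. The sketch below is a plain-Python stand-in, not Weave's implementation: `tracked_op` and `CallRecord` are hypothetical names that only mimic how `.call()` hands back the result together with a record of the call that a scorer could later be attached to.

```python
import functools
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class CallRecord:
    """Hypothetical stand-in for the tracking information of one call."""
    op_name: str
    inputs: dict
    output: Any = None
    scores: dict = field(default_factory=dict)


def tracked_op(fn: Callable) -> Callable:
    """Hypothetical decorator: adds a .call() that returns (result, record)."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        # Calling the op directly returns only the result.
        return fn(*args, **kwargs)

    def call(**kwargs):
        # .call() returns the result plus a record describing the call.
        result = fn(**kwargs)
        return result, CallRecord(op_name=fn.__name__, inputs=kwargs, output=result)

    wrapper.call = call
    return wrapper


@tracked_op
def generate_text(prompt: str) -> str:
    return prompt.upper()  # placeholder for an LLM call


result = generate_text("hello")                    # result only
result, call = generate_text.call(prompt="hello")  # result and call record
```

With a real Weave op, the same two-value unpacking applies; only the decorator and the call object come from Weave instead of this stand-in.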

Getting Started with Scorers

Basic Example

Here’s a simple example showing how to use .call() with a scorer:

Using Scorers as Guardrails

Guardrails act as safety checks that run before allowing LLM output to reach users. Here’s a practical example:

Scorer Timing

When applying scorers:

- The main operation (generate_text) completes and is marked as finished in the UI

- Scorers run asynchronously after the main operation

- Scorer results are attached to the call once they complete

- You can view scorer results in the UI or query them via the API
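The guardrail pattern described in this section can be sketched in plain Python. Everything here is an assumption made for illustration: `generate_text`, `toxicity_score`, the `BLOCKED_TERMS` list, and the `flagged` field are hypothetical placeholders rather than Weave APIs. The point is only the control flow: generate, score, then decide whether the output may reach the user.

```python
BLOCKED_TERMS = {"idiot", "stupid"}  # hypothetical policy, for illustration only


def generate_text(prompt: str) -> str:
    """Placeholder for an LLM call."""
    return f"Echo: {prompt}"


def toxicity_score(output: str) -> dict:
    """Toy scorer: flags output that contains a blocked term."""
    words = {w.strip(".,!?").lower() for w in output.split()}
    return {"flagged": bool(words & BLOCKED_TERMS)}


def generate_safe_response(prompt: str) -> str:
    """Guardrail: only return the output if the scorer does not flag it."""
    output = generate_text(prompt)
    score = toxicity_score(output)
    if score["flagged"]:
        return "I cannot generate that content."
    return output
```

Because the scorer sits on the response path, its latency is added to every request, which is why guardrail scorers need to be fast.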

Using Scorers as Monitors

If you want to track quality metrics without writing scoring logic into your app, you can use monitors. A monitor is a background process that:

- Watches one or more specified functions decorated with weave.op

- Scores a subset of calls using an LLM-as-a-judge scorer, which is an LLM with a specific prompt tailored to the ops you want to score

- Runs automatically each time the specified weave.op is called, with no need to manually call .apply_scorer()

Monitors are well suited to:

- Evaluating and tracking production behavior

- Catching regressions or drift

- Collecting real-world performance data over time

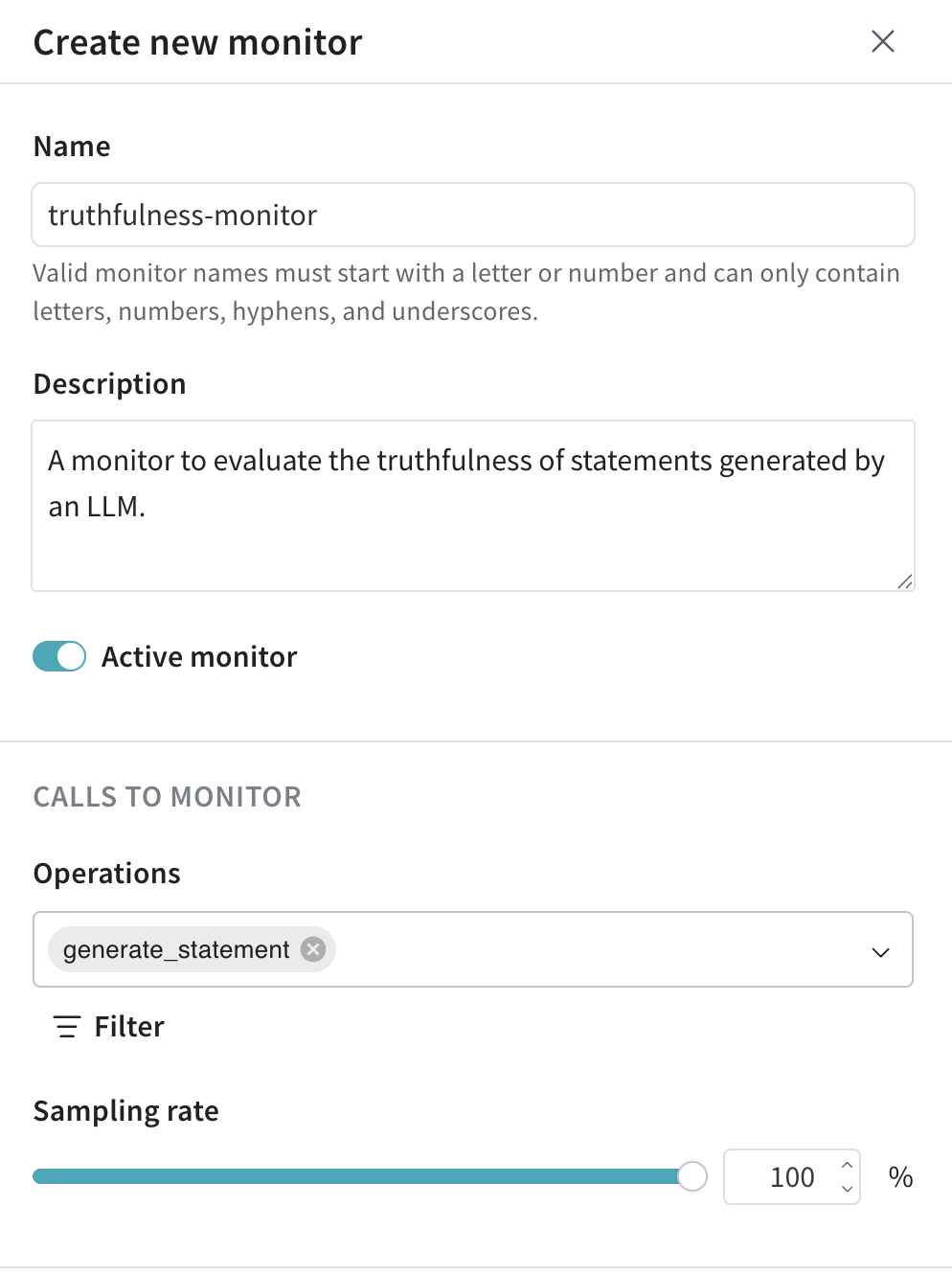

Create a monitor

- From the left menu, select the Monitors tab.

- From the monitors page, click New Monitor.

- In the drawer, configure the monitor:

- Name: Valid monitor names must start with a letter or number and can only contain letters, numbers, hyphens, and underscores.

- Description (optional): Explain what the monitor does.

- Active monitor toggle: Turn the monitor on or off.

- Calls to monitor:

- Operations: Choose one or more

@weave.ops to monitor. - Filter (optional): Narrow down which op columns are eligible for monitoring (e.g.,

max_tokensortop_p) - Sampling rate: The percentage of calls to be scored, between 0% and 100% (e.g., 10%)

- Operations: Choose one or more

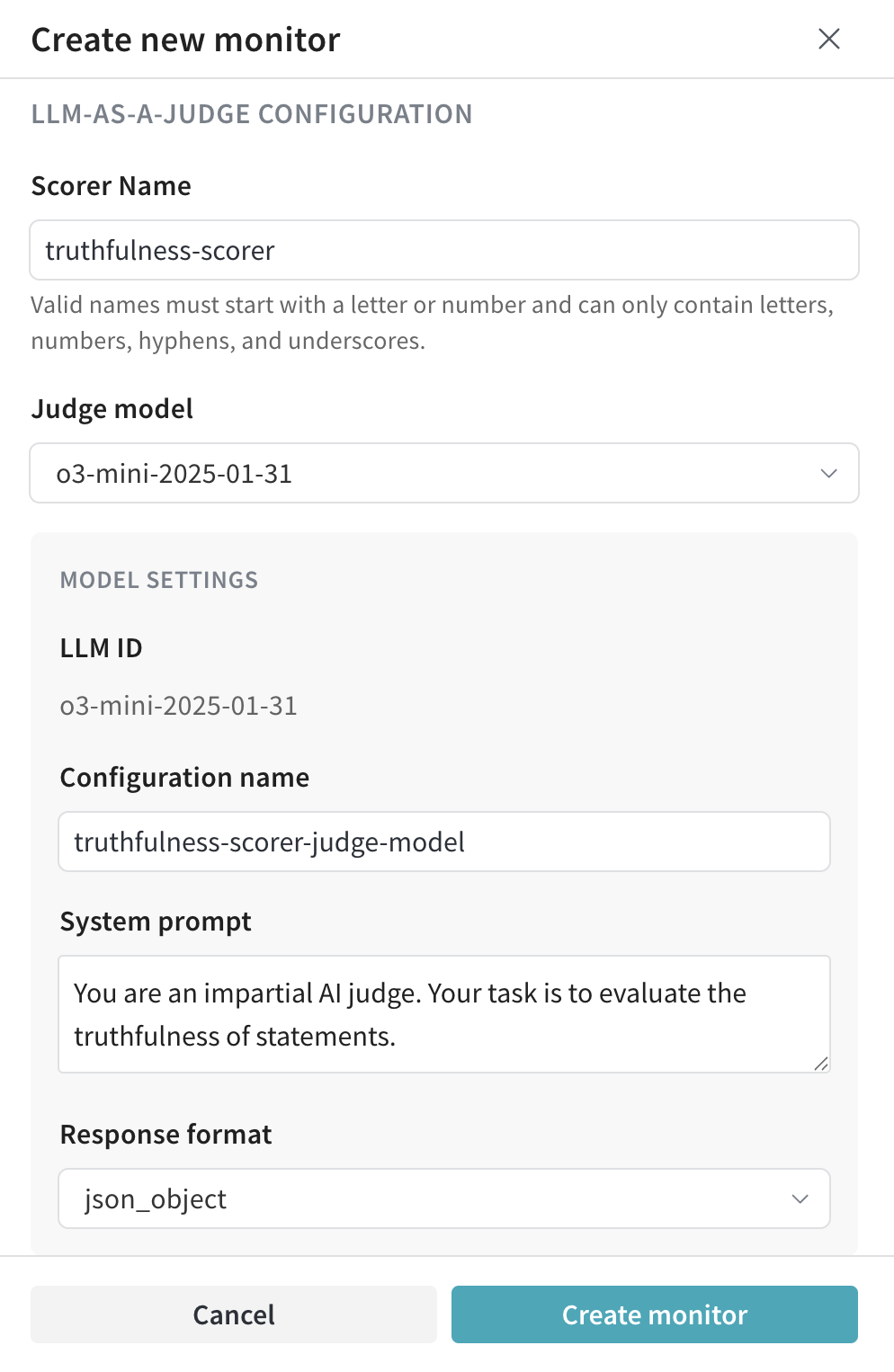

- LLM-as-a-Judge configuration:

- Scorer name: Valid scorer names must start with a letter or number and can only contain letters, numbers, hyphens, and underscores.

- Judge model: Select the model that will score your ops. Three types of models are available:

- Saved models

- Models from providers configured by your W&B admin

- W&B Inference models For the selected model, configure the following settings:

- Configuration name

- System prompt

- Response format

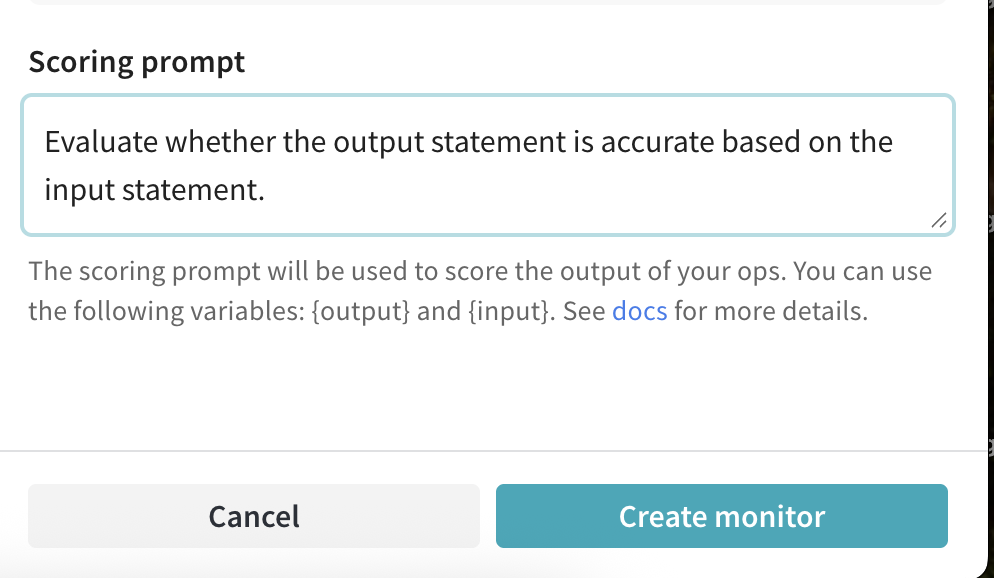

- Scoring prompt: The prompt used by the LLM-as-a-judge to score your ops. “You can reference

{output}, individual inputs (like{foo}), and{inputs}as a dictionary. For more information, see prompt variables.”

- Click Create Monitor. Weave will automatically begin monitoring and scoring calls that match the specified criteria. You can view monitor details in the Monitors tab.
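If you generate monitor or scorer names programmatically, you may want to check them against the naming rule above before entering them. The following is a minimal sketch of that rule as a regular expression. `is_valid_name` is a hypothetical helper, not part of Weave, and it assumes "letters" and "numbers" mean ASCII characters.

```python
import re

# Starts with a letter or number, followed by letters, numbers, hyphens, or underscores.
# Assumption: only ASCII letters and digits count.
NAME_PATTERN = re.compile(r"[A-Za-z0-9][A-Za-z0-9_-]*")


def is_valid_name(name: str) -> bool:
    """Return True if name satisfies the monitor/scorer naming rule."""
    return NAME_PATTERN.fullmatch(name) is not None
```

For example, `is_valid_name("truthfulness-monitor")` passes, while a name that starts with a hyphen or contains a space does not.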

Example: Create a truthfulness monitor

In the following example, you’ll create:- The

weave.opto be monitored,generate_statement. This function outputs statements that either returns the inputground_truthstatement (e.g."The Earth revolves around the Sun."), or generates a statement that is incorrect based on theground_truth(e.g."The Earth revolves around Saturn.") - A monitor,

truthfulness-monitor, to evaluate the truthfulness of the generated statements.

- Define

generate_statement: - Execute the code for

generate_statementto log a trace. Thegenerate_statementop will not appear in the Op dropdown unless it was logged at least once. - In the Weave UI, navigate to Monitors.

- From the monitors page, click New Monitor.

- Configure the monitor as follows:

- Name:

truthfulness-monitor - Description:

A monitor to evaluate the truthfulness of statements generated by an LLM. - Active monitor toggle:

Toggle on to begin scoring calls as soon as the monitor is created.

- Calls to Monitor:

- Operations:

generate_statement. - Filter (optional): None applied in this example, but could be used to scope monitoring by arguments like

temperatureormax_tokens. - Sampling rate:

Set to100%to score every call.

- Operations:

- LLM-as-a-Judge Configuration:

- Scorer name:

truthfulness-scorer - Judge model:

o3-mini-2025-01-31 - Model settings:

- LLM ID:

o3-mini-2025-01-31 - Configuration name:

truthfulness-scorer-judge-model - System prompt:

You are an impartial AI judge. Your task is to evaluate the truthfulness of statements. - Response format:

json_object - Scoring prompt:

- Scorer name:

- Name:

- Click Create Monitor. The

truthfulness-monitoris ready to start monitoring. - Generate statements for evaluation by the monitor with true and easily verifiable

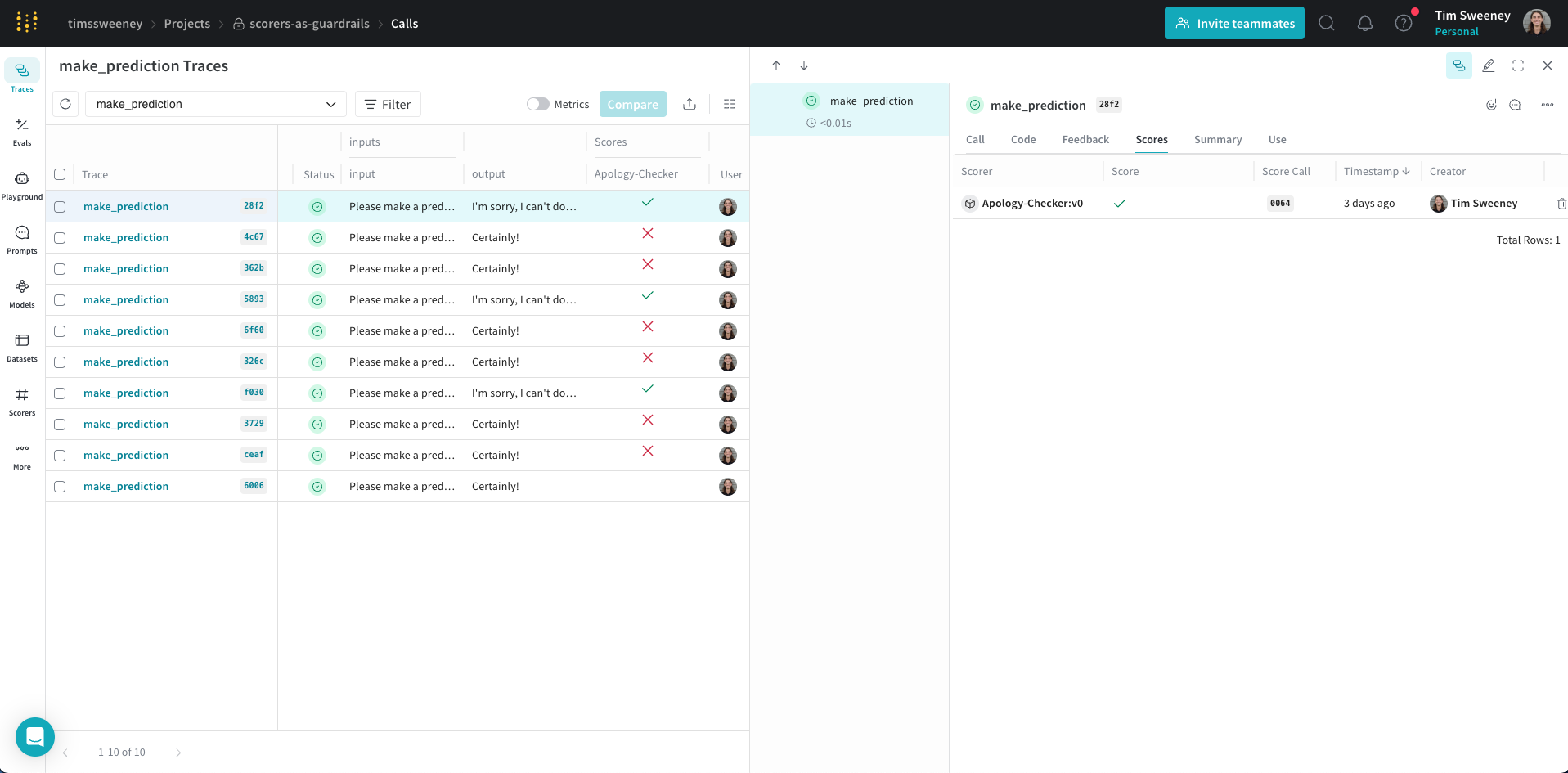

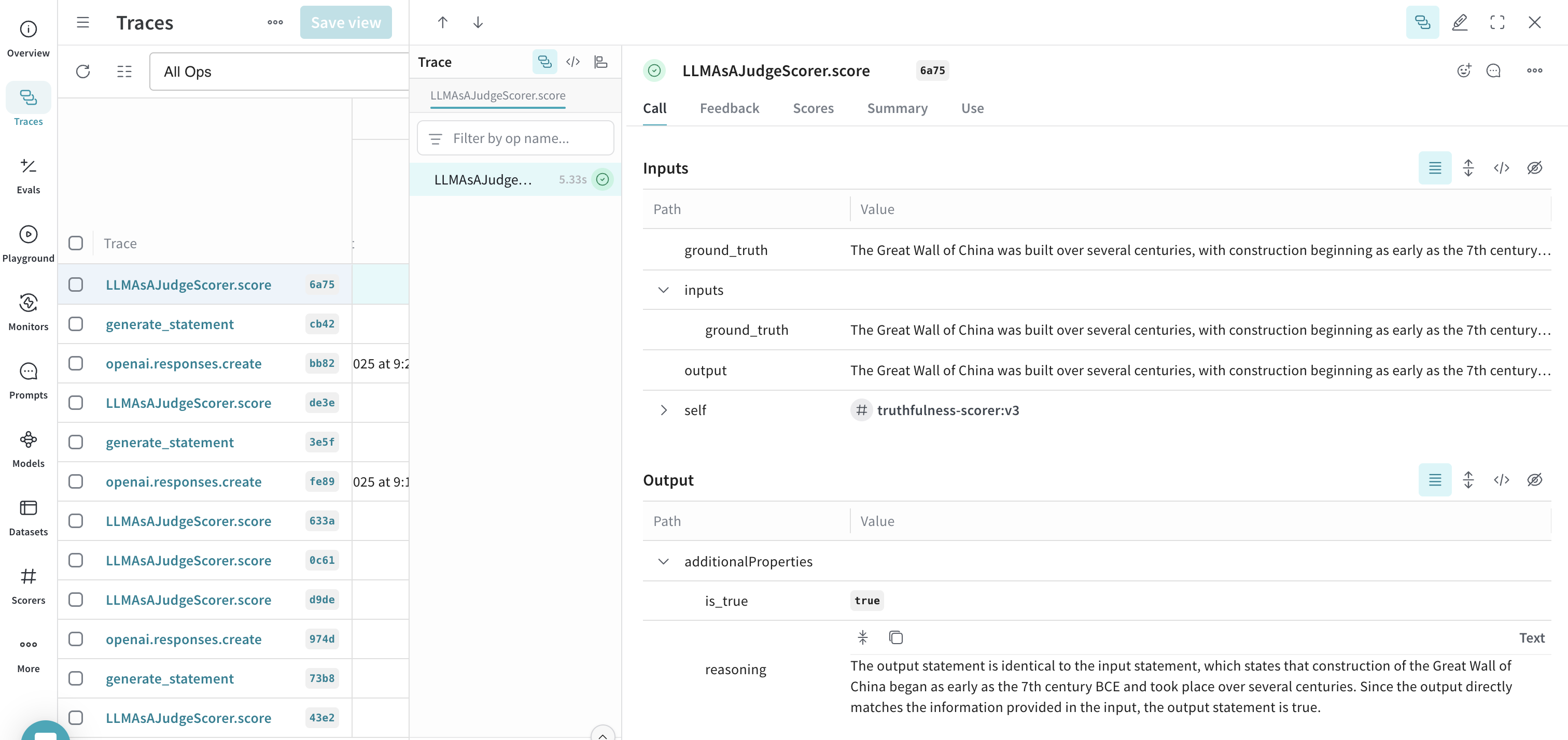

ground_truthstatements such as"Water freezes at 0 degrees Celsius.". - In the Weave UI, navigate to the Traces tab.

- From the list of available traces, select any trace for LLMAsAJudgeScorer.score.

- Inspect the trace to see the monitor in action. For this example, the monitor correctly evaluated the

output(in this instance, equivalent to theground_truth) astrueand provided soundreasoning.

Prompt variables

In scoring prompts, you can reference multiple variables from your op. These values are automatically extracted from your function call when the scorer runs. For an example function that takes two input arguments, foo and bar, the following variables are available:

| Variable | Description |
|---|---|
| {foo} | The value of the input argument foo |
| {bar} | The value of the input argument bar |
| {inputs} | A JSON dictionary of all input arguments |
| {output} | The result returned by your op |
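To make the substitution concrete, here is a minimal sketch of how a scoring prompt with these variables could be filled in. It is an illustration only: `render_scoring_prompt` is a hypothetical helper built on Python's `str.format`, not the mechanism Weave uses internally.

```python
import json


def render_scoring_prompt(template: str, inputs: dict, output) -> str:
    """Fill {output}, {inputs}, and one placeholder per input argument.

    Assumption: no input argument is itself named "inputs" or "output".
    """
    values = dict(inputs)                  # {foo}, {bar}, ...
    values["inputs"] = json.dumps(inputs)  # {inputs} as a JSON dictionary
    values["output"] = output              # {output}
    return template.format(**values)


prompt = render_scoring_prompt(
    "Given foo={foo} and all inputs {inputs}, is '{output}' correct?",
    inputs={"foo": "a", "bar": "b"},
    output="a and b",
)
```

Here `{foo}` resolves to a single argument, while `{inputs}` resolves to the whole argument dictionary serialized as JSON.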

AWS Bedrock Guardrails

The BedrockGuardrailScorer uses AWS Bedrock’s guardrail feature to detect and filter content based on configured policies. It calls the apply_guardrail API to apply the guardrail to the content.

To use the BedrockGuardrailScorer, you need the following:

- An AWS account with Bedrock access

- A configured guardrail in the AWS Bedrock console

- The boto3 Python package

Implementation Details

The Scorer Interface

A scorer is a class that inherits from Scorer and implements a score method. The method receives:

- output: The result from your function

- Any input parameters matching your function’s parameters

Score Parameters

Parameter Matching Rules

- The output parameter is special and always contains the function’s result

- Other parameters must match the function’s parameter names exactly

- Scorers can use any subset of the function’s parameters

- Parameter types should match the function’s type hints
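The matching rules above can be sketched with the standard library's `inspect` module. This is an illustration of name-based matching under stated assumptions, not Weave's implementation: `select_scorer_args` and `length_score` are hypothetical, and the sketch ignores default values and type checks.

```python
import inspect


def select_scorer_args(score_fn, op_inputs: dict, output) -> dict:
    """Build kwargs for score_fn from an op's inputs and output, matched by name."""
    kwargs = {}
    for name in inspect.signature(score_fn).parameters:
        if name == "output":
            kwargs[name] = output           # always the op's result
        elif name in op_inputs:
            kwargs[name] = op_inputs[name]  # exact name match
        else:
            raise TypeError(f"score parameter {name!r} matches no op input")
    return kwargs


def length_score(output: str, max_length: int) -> dict:
    """Toy scorer that uses only a subset of the op's parameters."""
    return {"within_limit": len(output) <= max_length}


op_inputs = {"prompt": "Say hi", "max_length": 5}
kwargs = select_scorer_args(length_score, op_inputs, output="Hi!")
result = length_score(**kwargs)
```

The scorer never sees `prompt` because it does not declare a parameter with that name, which is the "any subset" rule in practice.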

Handling Parameter Name Mismatches

Sometimes your scorer’s parameter names might not match your function’s parameter names exactly. In that case, you can use column_map to map between the two sets of names. Common use cases for column_map:

- Different naming conventions between functions and scorers

- Reusing scorers across different functions

- Using third-party scorers with your function names
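The idea behind a name mapping can be sketched as a simple renaming step that runs before the scorer is called. This sketch assumes the map goes from scorer parameter name to function parameter name. `apply_column_map` and `echoes_prompt_score` are hypothetical helpers for illustration and do not reproduce Weave's internals.

```python
def apply_column_map(op_inputs: dict, column_map: dict) -> dict:
    """Expose op inputs under the names the scorer expects.

    Assumption: column_map maps scorer parameter name -> op parameter name.
    """
    renamed = dict(op_inputs)
    for scorer_name, op_name in column_map.items():
        renamed[scorer_name] = op_inputs[op_name]
    return renamed


def echoes_prompt_score(output: str, text: str) -> dict:
    """Toy scorer whose parameter is named text, while the op uses prompt."""
    return {"echoes_prompt": text.lower() in output.lower()}


op_inputs = {"prompt": "weather"}
scorer_inputs = apply_column_map(op_inputs, column_map={"text": "prompt"})
result = echoes_prompt_score(output="The weather is sunny.", text=scorer_inputs["text"])
```

The same scorer can then be reused with another function by changing only the map, not the scorer.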

Adding Additional Parameters

Sometimes scorers need extra parameters that aren’t part of your function. You can provide these using additional_scorer_kwargs.

Using Scorers: Two Approaches

You can use scorers in two ways:

- With Weave’s Op System (Recommended)

- Direct Usage (Quick Experiments)

When to use each:

- Use the op system for production, tracking, and analysis

- Use direct scoring for quick experiments or one-off evaluations

Tradeoffs of direct usage:

- Pros: Simpler for quick tests

- Pros: No Op required

- Cons: No association with the LLM/Op call

Score Analysis

For detailed information about querying calls and their scorer results, see our Score Analysis Guide and our Data Access Guide.

Production Best Practices

1. Set Appropriate Sampling Rates
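A sampling rate can be applied with a single random draw per call. The sketch below is a generic illustration, with `should_score` as a hypothetical helper. It assumes the rate is expressed as a fraction between 0.0 and 1.0, so 10% is `0.10`.

```python
import random


def should_score(sampling_rate: float, rng: random.Random) -> bool:
    """Return True for roughly sampling_rate of calls (0.0 to 1.0)."""
    return rng.random() < sampling_rate


rng = random.Random(0)  # seeded only to make the sketch reproducible
scored = sum(should_score(0.10, rng) for _ in range(10_000))
```

A rate of `1.0` scores every call, which suits guardrails, while a lower rate keeps the cost of expensive monitoring scorers bounded.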

2. Monitor Multiple Aspects

3. Analyze and Improve

- Review trends in the Weave Dashboard

- Look for patterns in low-scoring outputs

- Use insights to improve your LLM system

- Set up alerts for concerning patterns (coming soon)

4. Access Historical Data

Scorer results are stored with their associated calls and can be accessed through:

- The Call object’s feedback field

- The Weave Dashboard

- Our query APIs

5. Initialize Guards Efficiently

For optimal performance, especially with locally-run models, initialize your guards outside of the main function. This pattern is particularly important when:

- Your scorers load ML models

- You’re using local LLMs where latency is critical

- Your scorers maintain network connections

- You have high-traffic applications
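The initialization pattern can be sketched as follows. `ToxicityGuard` is a hypothetical scorer whose constructor stands in for an expensive step such as loading a model, and the counter exists only to show that construction happens once rather than once per request.

```python
class ToxicityGuard:
    """Hypothetical scorer whose constructor is expensive (e.g. loads a model)."""

    instances_created = 0

    def __init__(self) -> None:
        ToxicityGuard.instances_created += 1  # stands in for a slow model load

    def score(self, output: str) -> dict:
        return {"flagged": "badword" in output.lower()}


# Created once at import time and reused by every request.
toxicity_guard = ToxicityGuard()


def handle_request(prompt: str) -> str:
    output = f"Echo: {prompt}"  # placeholder for the LLM call
    if toxicity_guard.score(output)["flagged"]:
        return "Response blocked."
    return output


responses = [handle_request(p) for p in ("hello", "a badword here", "bye")]
```

Constructing the guard inside `handle_request` instead would repeat the expensive setup on every call and add that cost to each response.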

Complete Example

A comprehensive example brings together all the concepts we’ve covered, demonstrating:

- Proper scorer initialization and error handling

- Combined use of guardrails and monitors

- Async operation with parallel scoring

- Production-ready error handling and logging
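The points above can be sketched with the standard library alone. This is not the guide's original example and it does not call Weave: `toxicity_guard`, `quality_monitor`, and `generate_safe_response` are hypothetical placeholders, and failing closed when the guardrail errors is one possible design choice among several.

```python
import asyncio
import logging

logger = logging.getLogger(__name__)


async def toxicity_guard(output: str) -> dict:
    """Guardrail scorer (toy): flags a hypothetical blocked word."""
    return {"flagged": "badword" in output.lower()}


async def quality_monitor(output: str) -> dict:
    """Monitor scorer (toy): records a length-based metric, never blocks."""
    return {"long_enough": len(output) >= 10}


async def generate_safe_response(prompt: str) -> str:
    output = f"Echo: {prompt}"  # placeholder for the LLM call
    # Run both scorers concurrently; a failed scorer is returned, not raised.
    guard, quality = await asyncio.gather(
        toxicity_guard(output), quality_monitor(output), return_exceptions=True
    )
    if isinstance(quality, Exception):
        logger.warning("monitor failed: %s", quality)  # monitors never block
    if isinstance(guard, Exception):
        logger.error("guardrail failed: %s", guard)
        return "Sorry, something went wrong."  # fail closed (a design choice)
    if guard["flagged"]:
        return "I cannot generate that content."
    return output


safe = asyncio.run(generate_safe_response("hello world"))
blocked = asyncio.run(generate_safe_response("a badword"))
```

Only the guardrail result affects what the user sees. The monitor result is collected for later analysis, and a monitor failure is logged without changing the response.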

Next Steps

- Explore Available Scorers

- Learn about Weave Ops